Conscious and Preconscious processing: the case of dementia

- ntyler31

- Aug 24, 2025


UCL PEARL Working Paper August 2025

Nick Tyler

I look at the world, and thus at dementia (and other!) situations, from a combination of music and engineering perspectives.

On the music side, a musician takes their learning and experience on both technical and artistic grounds and applies these to create a new interpretation in the context of the given surroundings of the performance space. Each performance is an original, even if the musician has performed the piece of music in question many times before: it is always repeating, but never just repeated, and each performance is a new unique experience. The performance consists of the previously interpreted ideas, based on that previous artistic and technical experience, combined with the current world of other musicians, audience, performance space acoustics etc. to create the unique performance on this occasion.

In addition, the musician may try new ideas within the performance, taking advantage of the uniqueness of the surroundings at that moment. Some of these might succeed and pass into the battery of updated experience; some might fail and pass into the battery of updated experience. Both together create an impulsion for future performance. It is this live creativity in the moment that gives live performance its life and creates the atmosphere of ultimate creating rather than repeating. It is perhaps most obviously understood in improvisations, such as in jazz (or, as in my case, in the performance of 18th-century baroque music), but it is the case even in performances of music that is highly scripted. The printed music is just a code created by a composer for a performer to recreate in the circumstances of the live concert. The music only actually exists in the moment of that performance.

On the engineering side, we are talking about a complex system where the properties of the system only emerge as the system elements interact with each other. Thus the properties of the system cannot be predicted, even though the properties and capabilities of the elements in the system and the linkages between them are well known. For example, the weather, if viewed as a system, has well-known relationships between pressure, temperature and time, but we have very poor knowledge of the initial conditions that pertain to any instant, so we do not know the precise values of any element of the system at any moment. However, by calculating the outcomes across the range of possible initial conditions, the deterministic nature of the system means that we can begin to see what might be more likely to happen in a particular place at a particular time. We cannot be certain about the precise values, but we can have a reasonable idea. We can also see that for many situations, the range of possible values of the initial conditions yields very little change in predicted outcome, whereas in some situations a tiny change in one initial condition could yield a massive change in outcome at another location. In this engineering case we have to create a system which can function with such uncertainty in a way that we can predict well enough to function within it.
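The idea of running a deterministic system from many slightly different initial conditions can be sketched in a few lines. The example below is only an illustration: it uses the logistic map as a stand-in for a deterministic system (it is not a weather model), and the parameter values, ensemble size and noise level are all assumptions chosen for the demonstration. In one regime the spread of outcomes stays tiny; in the other, the same tiny uncertainty in the starting point produces widely different outcomes.

```python
import random
import statistics


def logistic_map(x0, r, steps=50):
    """Iterate the deterministic rule x -> r * x * (1 - x)."""
    x = x0
    for _ in range(steps):
        x = r * x * (1.0 - x)
    return x


def ensemble_spread(r, x0=0.4, noise=1e-6, members=200, seed=0):
    """Run many copies from slightly different starting points and
    return the standard deviation of their final states."""
    rng = random.Random(seed)
    finals = [
        logistic_map(x0 + rng.uniform(-noise, noise), r)
        for _ in range(members)
    ]
    return statistics.pstdev(finals)


# Illustrative parameter choices (assumptions, not taken from the paper).
stable_spread = ensemble_spread(r=2.8)   # settles towards a fixed point
chaotic_spread = ensemble_spread(r=3.9)  # highly sensitive to the start

print(f"spread of outcomes, stable regime:  {stable_spread:.2e}")
print(f"spread of outcomes, chaotic regime: {chaotic_spread:.2e}")
```

The rule is identical in both runs; only the regime differs, which is the sense in which a system can be deterministic and still hard to predict.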

So both music and engineering have a sense of determinism (the printed notes in music, the relationships between the elements of the system in engineering), but both also have a sense of the unknown (the creativity in performance, the not-random-randomness in a complex system). This is how I think of the process of a person creating a reaction to the environment. Whether we are talking about creating a musical performance or an engineered system, we are talking of first creating a perception, a model of the world ‘as we perceive it’: “have the sound in your head before you play, and then, when you play the sound is your direction”[1]. This perception is the reality on which we base our subsequent decisions. The music and engineering examples are just precise examples of the same process by which we determine what steps to take in order to survive in the complex environment in which we find ourselves.

This means that we need to see how we create this perception.

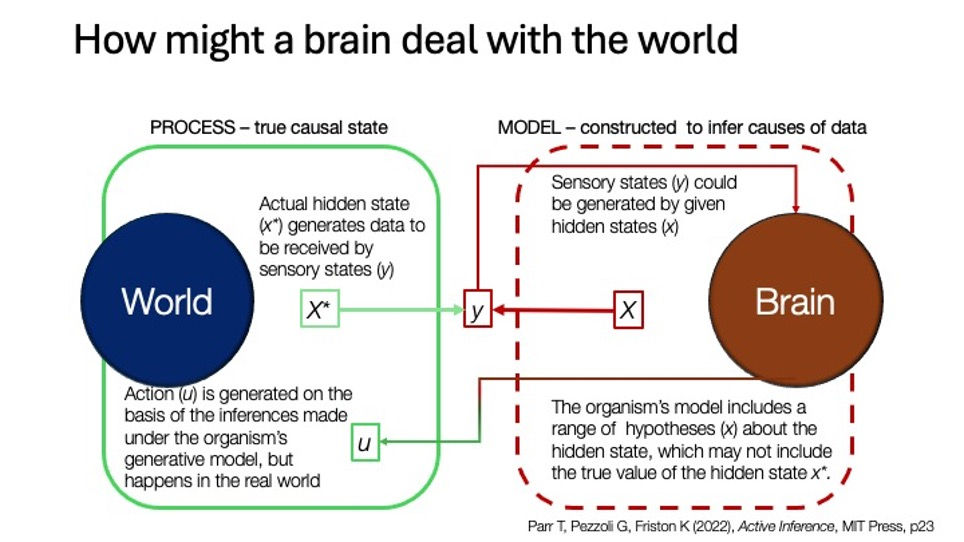

We create a model of the world which is based on the information we receive through our sensory pathways (see Figure 1). The key is to understand that there will be a difference between the model of the world as produced in the brain by this process, and the actual world, in other words, a “Sensory Prediction Error”. This error is produced in multiple parts of the process, including the sensors, sensorial pathways, the interpretation of the outputs and the interpretation of these to form the perception. The step forward in theoretical neurobiology[2] was to realise that the brain works to minimise the entropy (i.e. reduce the Sensory Prediction Error) of this system in order to maintain the body’s survival, and it is this entropy minimisation process that drives the process of creating the response. The brain is thus a predictive organ striving to correct its imperfect predictions and inferences. It is for this reason that the process is called Active Inference: we work on the basis that we continuously and actively infer the future and respond in a way to protect our survival.
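As a rough illustration of the idea of reducing a prediction error over time, the sketch below repeatedly nudges an internal estimate towards what is sensed. This is a deliberately simple toy update rule, not the formulation of Active Inference in the literature cited here; the temperatures, the learning rate and the function name `update_belief` are hypothetical.

```python
def update_belief(belief, observation, learning_rate=0.2):
    """Move the belief a fraction of the way towards the observation.

    Returns the new belief and the prediction error that drove the update.
    """
    prediction_error = observation - belief
    return belief + learning_rate * prediction_error, prediction_error


true_state = 21.0   # hypothetical actual room temperature, deg C
belief = 26.0       # hypothetical initial estimate held by the brain

errors = []
for _ in range(20):
    observation = true_state  # noise-free sensing, to keep the sketch simple
    belief, error = update_belief(belief, observation)
    errors.append(abs(error))

print(f"first prediction error: {errors[0]:.2f}")
print(f"last prediction error:  {errors[-1]:.2f}")
print(f"final belief:           {belief:.2f}")
```

If the sensing itself were faulty (a biased `observation`), the same rule would settle confidently on the wrong value, which is one way of picturing a perception that differs from the actual world.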

We have observed this process occurring in PEARL[3], for example, when people felt that the building became warmer when we switched off the simulated sound of an air conditioning system, or when people in a train leant forward in preparation for the inertial forces of the deceleration process when they heard it slowing down and applying the brakes to arrive in a station, even though the train was not actually moving. In both cases, there was a Sensory Prediction Error. People perceived and reacted to the cooling effect of an air conditioning system, even though there wasn’t one, or to the effects of changes in motion even though they were not moving.

The Active Inference process is shown in Figure 1, where the process is characterised as a real process in the actual world (what is actually happening) and a modelled process in the brain based on the interpretation of incoming data stimulating a model of the world’s process. Figure 1 shows the process of generating a set of sensory states (Y) on the basis of hypotheses (X) generated in the brain resulting from sensory inputs via the sensory pathways, and which may or may not include the actual true state of the world (X*). These result in the brain instructing some action (U) which will usually take place in the real world. Our experiments in PEARL are conceived to obtain data about the sensory states (Y) as perceived by a person through hypotheses (X) (i.e. the physiological and neurological data) and conscious representations of these hypotheses (U) (represented by data from, for example, ‘scores’ recorded on a Likert scale slider and interview responses).

Figure 1 Active Inference process relating a real world to an estimated world in the brain

Figure 2 shows how the perception process is conceived following the latest thinking in theoretical neurobiology. In this model, Perception is constructed, starting with the Lived Experience of the individual (represented as something like “what happened last time I received data like this?”), supplemented by the incoming multisensory data arriving via the sensory pathways. There are many sensory pathways, some internal and some external. Typically, we think of 5 sensory pathways (vision, hearing, smell, touch, taste), but there are many more and these can affect the creation of a perception (you might perceive the world differently if you have a stomachache, for example).

Figure 2 Perception Process[4]

The brain responds to the blend of Lived Experience and the multisensory data with a response that is initially framed as an emotion. The resulting action may be a preconscious reaction, for example the release of a stress hormone such as cortisol or adrenaline, or perhaps a ‘reward’ such as dopamine. If of sufficient concern, this generates a conscious awareness to which the person reacts, for example “do what I did last time”, such as “run away”. As an analogy, I have described the preconscious process as a kind of “Symphony”, where the entirety of the experience of multiple instruments in the orchestra (inputs) is brought together into one perception. Conscious Perception is then a “Sonata”: a much smaller scale of performance, maybe only one or two instruments. The experience is still that of perceiving a performance, but the contributing inputs are far fewer and maybe easier to understand. Some specific details of the Symphony might be passed on to the Conscious Perception (maybe a particularly beautiful melody or something that is out of tune).

To put the conscious and preconscious processing of information into perspective, the brain’s capacity for processing data is around 11 million bits/sec, of which 80 bits/sec are allocated to conscious awareness[5]. The vast bulk of the ‘work’ is therefore preconscious and includes not just the five common senses (which are mainly exteroceptive) but also senses within the body (interoceptive senses) which contribute to the modification of the perception on the basis of current conditions within the body. Accordingly, a reaction from the Preconscious process might be thought of as “imagining” whereas the Conscious process might be a more specific “thinking” process. But these are just analogies!
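The proportion implied by these two figures is easy to work out. The short calculation below uses only the two numbers quoted above; the variable names are illustrative.

```python
total_bits_per_sec = 11_000_000   # whole-brain figure quoted above
conscious_bits_per_sec = 80       # conscious share quoted above

conscious_fraction = conscious_bits_per_sec / total_bits_per_sec
one_in = total_bits_per_sec / conscious_bits_per_sec

print(f"conscious share: {conscious_fraction:.6%}")   # 0.000727%
print(f"roughly 1 bit in every {one_in:,.0f}")        # 137,500
```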

What results from this process is a new Lived Experience, which is added to the previous Lived Experience and thus constitutes a form of learning. This is achieved through a process of Impulsion, which, as John Dewey explains, is how one impact acts repeatedly on another, increasing in strength over time[6]. This inclusion of highly individual sensorial inputs means that one person’s perception has minimal (although not zero) relation to that of another person.

The key point here is that everybody has a reality that is different from everyone else’s, because perception is so heavily based on Lived Experience. This is of course highly individual – every person on the planet has a different Lived Experience and so creates a different reality. This brings into question what we actually mean by “reality”.

Joseph Jastrow raised the question of reality in a fascinating article in the Popular Science Monthly in February 1899[7]. One of the illustrations he used became known as the duck-rabbit illusion, which he obtained from a German journal Fliegende Blätter (see Figure 3). Which is real, the duck or the rabbit? It depends on what moment you are referring to. Reality is made up in the mind, or what Jastrow called “The Mind’s Eye”. With perception being created based heavily on the Lived Experience of the perceiver, and everybody having a different Lived Experience, everybody creates their own reality and it is as flexible as the duck-rabbit.

Figure 3 The duck-rabbit illusion (Fliegende Blätter, 1892 Nr 2465, p147, https://doi.org/10.11588/diglit.2137#0147)

However, there does not seem to be a specific place in the brain where this ‘perception creation’ happens. Given that the brain is an enormous plasticising network of linkages between a large number of neurons, I am supposing that this happens within the network. It is a complex system, in which the emergent properties are only observable as they emerge from the interactions between the elements of the system (neurons in this case). The smart thing with this particular system is that the network reorganises itself on the basis of what happened last time in order to update the lived experience (this is one of the effects of the impulsions) to create a new one. Therefore, as with a complex system (e.g. the weather, or a transport network, or a piece of music) we might know something about the inputs and the outputs but we do not know what happens in between. But the system kind of works: we survive. Basing outcomes on Lived Experience keeps things more or less stable, even if they change. Adding in the incoming information through the multisensory pathways brings the possibility for change which ensures that we do not simply repeat what happened last time (unless the Lived Experience tells us that it was a good call). So the performance changes.

What does all this mean for people living with dementia?

I am surmising that one of the effects of the dementia condition is that either the neurons themselves, or the connecting network, might be failing, misfiring or misconnecting, but that, at this very basic level, the process assumes that everything is working normally. So a neuron failing to fire, or a network connection failing to connect, is taken at face value and the perception is created accordingly. As a result it could be different from the one we might have expected. This means that the update to the Lived Experience is changed and so a cycle of different perceptions is created. To the brain, the perception is the reality, so a person with such a problem would be creating a different reality from the one that they might have created had their neurons and network been working as before. In this situation, a person living with dementia, for example, would be living on the basis of a reality that may not coincide with the reality perceived by other people around them: they might be seeing the duck while others see the rabbit.

So the person living with dementia creates their own realities just like everyone else does. To distinguish between their realities and those of people whose perception processes are not affected in this way, I call these different realities “Extended Realities” because they are no less real than anyone else’s, but as they are based on similar inputs we can consider them to be an ‘extension’ of the realities being perceived by other people. To the person with an extended reality, their actions are entirely logical and follow directly from the reality they perceive, but to an observer they may seem irrational or odd. For example, the Extended Reality perceived by a person looking at an object might be that it is a cupboard, while an observer looking at the same object sees a refrigerator. The resulting action reveals the difference: putting clothes into a cupboard makes sense, putting them in a refrigerator might not. But each perception is as real as the other. It seems that it might be helpful to try and understand these extended realities so that we can begin to understand the logic behind how people, including people living with dementia, create their own realities.

What this means for research

Generally, analyses of relationships between a phenomenon in the environment and a person’s perception of it are conceived and run using larger data samples in order to reduce the effects of variability. This is the basis of conventional statistical analysis. The thinking behind this is that there is some expectation that there is “a value” (for example, an average value) that could be used to represent the set of phenomena involved in the relationship being studied, and an associated variance that ‘explains’ the inevitable fact that in a natural system there will be a natural variation in the data. However, this convention is actually quite new and only really came into use in the early twentieth century, when R.A. Fisher was attempting to work out the production rates of different seed/soil combinations[8]. He lit upon the Gaussian distribution as a good descriptor and used the term ‘normal[9]’ as a way of describing the importance of this distribution in this context[10]. This was a convenient way of summarising data so that it could be used for analysis, comparison, and calculation.

When we are considering personal reactions to a perception of some multisensorial phenomena (such as a cupboard), the perception generates the outcomes in a highly individual process, where each individual makes their own perception of the phenomenon of interest (a refrigerator or a cupboard). This is why aggregated data about perceptions do not reveal very much. Each individual has their own response to a phenomenon which can be quite different from that of another individual. Until now it has not been possible to obtain any data to examine this perception creation process, so there has been no generic research thus far in examining individual responses to stimuli. Individuality has been observed and modelled in the past but this has, until very recently, been a rarity. However, in PEARL we have been building on earlier work in my PhD[11], which examined the ‘changes of mind’ in delivering ‘engineering judgement’ in the course of designing infrastructure under conditions of poor data availability. This work involved modelling a series of several hundred decisions made under different circumstances by one engineer to create a model of that engineer’s decision-making process that could reproduce similar decision outcomes to those of the engineer when faced with the same project requirements. Although at the time this was being done I didn’t think of it in quite this way, now it seems that I was looking at how the engineer changed his mind on the basis of a combination of Lived Experience and the incoming data that he sensed, and created a perception about the phenomenon he was examining. Our recent work on this involves exploring the consequences for data resulting from recent work in the field of Theoretical Neurobiology and its results from examining the process of decision making at the level of the individual brain.
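The point that aggregated perception data reveal little can be seen with a small made-up example. The scores below are hypothetical (they are not PEARL data): two participants rate the same five environments on a 1 to 7 scale and respond in opposite ways.

```python
import statistics

# Hypothetical 1-7 pleasantness scores for the same five environments.
scores = {
    "participant_A": [1, 2, 1, 2, 1],
    "participant_B": [7, 6, 7, 6, 7],
}

# Pooling everyone together gives a single "representative" value.
pooled = [s for person in scores.values() for s in person]
pooled_mean = statistics.mean(pooled)

# Looking at each person separately tells a very different story.
per_person_mean = {name: statistics.mean(vals) for name, vals in scores.items()}

print(f"pooled mean: {pooled_mean}")
for name, mean in per_person_mean.items():
    print(f"{name}: {mean}")
```

The pooled mean sits in the middle of the scale, a value that neither participant ever gave.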

Of course, we cannot ‘see’ most of this process (indeed even the person concerned is unaware of most of it) and most of our understanding of what is going on is determined by knowing about the external environment that is delivering the multisensorial data and the reactions as observed in people. We believe ‘our’ perception to be real – but so is that of a person with dementia: it is just that we have created different realities. Until recently, the process between these two points has been a bit of a black box, but more recently it has been possible to peer inside some of the processes involved in this transition. This is what drove the design of PEARL – to be able to control the external environmental data to a knowable, consistent and repeatable extent so that we can then understand the brain’s responses better. The “multisensory information” element in Figure 2 is expanded in Figure 4 to disclose a little more of the process.

Figure 4 Perception process, expanding the multisensory data assimilation process

It is important to understand the Active Inference process here because it becomes clear that there is inherent error in the process. Of course, in this particular case it is not “error” as such, it is simply different takes on the presented data/information: they are each “correct” in the specific conditions of the individual person at the moment of being exposed to the stimuli, and represent a result from different stages of the whole process described in Figure 2. This is rather like travelling on a journey from A to B. The traveller knows that they left A and arrived at B. The traveller’s family at A knows what A is like and his hosts at B know what B is like, but which route the traveller took between them is known only to the traveller. What this research is trying to do is to understand more of the journey so that we can have a better understanding of it. This would enable us to design the journey so that the traveller could have a better experience along the way. Knowing about the different routes taken by different travellers could help us understand different options so that we can determine which routes provide the better experience including the end points for each traveller.

To do this, we use two forms of data. First, we consider the Conscious reactions. For example, we could ask each participant a pair of questions about their response to a given environment: one about how pleasant they found the environment and the other about how stimulating they felt it was. For each question, participants could use a slider to give a value between 1 and 7 on a Likert scale, recording their assessment of the condition they had just experienced. The second form of data could be, for example, physiological measurements such as galvanic skin response or heart rate variability. The significance of these two types of data is that the slider data is a Conscious Reaction to the Conscious Perception and the physiological data indicates a Preconscious Reaction to the Preconscious Perception.
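One way to picture the two forms of data is as two separate records attached to the same trial. The sketch below is an assumption about how such data might be organised, not a description of the PEARL data format; the class names, field names and units are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConsciousResponse:
    """Slider answers given after experiencing an environment (1 to 7)."""
    pleasantness: int
    stimulation: int

    def __post_init__(self):
        # Reject values outside the 1-7 slider range.
        for name in ("pleasantness", "stimulation"):
            value = getattr(self, name)
            if not 1 <= value <= 7:
                raise ValueError(f"{name} must be between 1 and 7, got {value}")


@dataclass
class PreconsciousResponse:
    """Physiological measurements taken during the same exposure."""
    skin_conductance_microsiemens: List[float]
    heart_rate_variability_ms: float


@dataclass
class Trial:
    """One participant in one environment, with both streams kept apart."""
    participant_id: str
    environment: str
    conscious: ConsciousResponse
    preconscious: PreconsciousResponse


trial = Trial(
    participant_id="P01",
    environment="quiet_room",
    conscious=ConsciousResponse(pleasantness=6, stimulation=3),
    preconscious=PreconsciousResponse([2.1, 2.3, 2.2], heart_rate_variability_ms=48.0),
)
print(trial.conscious, trial.preconscious)
```

Keeping the streams in distinct types makes it harder to combine them by accident, since they differ in both source and function.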

This is shown diagrammatically in Figure 5, which is an adaptation of Figure 2 that shows how these data streams relate to the perception process. These data are quite different from each other in terms of both source and function and thus cannot be mixed. The feedback from Preconscious Reaction to Conscious Perception (how a preconscious reaction might affect a conscious perception and thus reaction) cannot be ascertained directly at this stage, but it might go some way to show how conscious reactions could be brought into line with preconscious reactions. Remember, the brain is trying to minimise the differences between the real world and its perception of it, and it will make some of these responses progress from a preconscious reaction into conscious perception and reaction in order to achieve that.

Inevitably, as stated earlier, as Perception is based ab initio on Lived Experience, every individual’s perception, both preconscious and conscious, will be unique to them, and any sense of finding some average ‘sweet spot’ that would suit everybody is a mirage.

How do we figure out how a perception turns into a reaction, when this is such an idiosyncratic process?

Figure 5 Preconscious and conscious perception, with indications of the point at which data collection of preconscious and conscious data could be undertaken

Analysis methods

To try to resolve this problem, we can start by turning to a Random Forest algorithm, to look at the various inputs to see if we could detect important differences between the responses of individuals.

The Random Forest algorithm is a powerful machine learning method that works like a team of experts, often making more accurate predictions than any single expert could alone. It operates by building many individual decision trees and then combining their results to arrive at a final decision. To ensure each tree is unique, the algorithm randomly selects a subset of the data and features to train each one. This intentional randomness prevents all the trees from making the same mistakes. When a new data point needs a prediction, every tree in the "forest" provides an answer. The final result is determined by a majority "vote" of all the trees (or, for numerical predictions, by averaging their answers), leading to a much more robust and reliable outcome.
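The mechanics described above (random subsets of data, random subsets of features, then a vote) can be sketched in plain Python. This is a heavily simplified toy, not a production Random Forest: each "tree" here is a single split (a decision stump), and the data, feature meanings and parameter values are invented for illustration. In practice one would use an established library.

```python
import random
from collections import Counter


def fit_stump(rows, labels, feature_ids):
    """Find the one-feature threshold split with the fewest training errors."""
    majority = Counter(labels).most_common(1)[0][0]
    best = (len(labels) + 1, feature_ids[0], float("-inf"), majority, majority)
    for f in feature_ids:
        for t in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] < t]
            right = [y for row, y in zip(rows, labels) if row[f] >= t]
            if not left or not right:
                continue
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0]
            errors = sum(y != left_label for y in left)
            errors += sum(y != right_label for y in right)
            if errors < best[0]:
                best = (errors, f, t, left_label, right_label)
    return best[1:]  # (feature, threshold, label below, label at or above)


def fit_forest(rows, labels, n_trees=51, n_features=2, seed=0):
    """Train each stump on a bootstrap sample and a random feature subset."""
    rng = random.Random(seed)
    n = len(rows)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        feature_ids = rng.sample(range(len(rows[0])), n_features)
        forest.append(
            fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feature_ids)
        )
    return forest


def predict(forest, row):
    """Every stump votes; the most common answer wins."""
    votes = [right if row[f] >= t else left for f, t, left, right in forest]
    return Counter(votes).most_common(1)[0][0]


# Invented data: columns are [light level, noise level, warmth], all 0 to 1.
# The label (1 = "pleasant") depends only on the light level.
data_rng = random.Random(42)
rows = [[i / 40, data_rng.random(), data_rng.random()] for i in range(40)]
labels = [1 if row[0] >= 0.5 else 0 for row in rows]

forest = fit_forest(rows, labels)
print(predict(forest, [0.9, 0.5, 0.5]), predict(forest, [0.1, 0.5, 0.5]))
```

Some stumps never see the informative feature and vote more or less arbitrarily, but the vote across the whole forest is much more reliable than any one of them.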

Figure 6 A representation of a Random Forest Algorithm (https://www.grammarly.com/blog/ai/what-is-random-forest/, accessed 18 August 2025)

Compared to a Linear Regression model, which is a simpler, traditional statistical model, Random Forest is a more modern and complex approach. It can automatically capture intricate non-linear patterns and interactions between features, so its predictive accuracy for complex data is often considerably better.

However, a key drawback of Random Forest is its lack of inherent interpretability. It often acts as a "black box" where you know the final prediction but not intuitively why that prediction was made. This is where SHAP (SHapley Additive exPlanations) comes in. SHAP is a method to explain any machine learning model's output by assigning an importance value to each feature for a particular prediction. Shapley values were created to address the issue of a group of players participating in a collaborative game, where they work together to reach a certain payout. The payout of the game needs to be distributed among the players, who may have contributed differently[12]. Another example could be calculating the different contributions to a shared taxi fare, given the preferences of different passengers if they were not sharing with all (or any) of the others. Shapley values provide a mathematical method of fairly dividing the payout among the players or the fare contributions of the taxi passengers.
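The shared taxi example can be worked through exactly for a small group. The sketch below makes some assumptions that are not in the text: three hypothetical passengers, invented solo fares, and a rule that a shared ride costs as much as the fare of the passenger travelling furthest. The Shapley value of each passenger is the extra cost they add when they join the ride, averaged over every possible joining order.

```python
from fractions import Fraction
from itertools import permutations

# Hypothetical fares each passenger would pay if travelling alone.
solo_fare = {"Ann": 6, "Bob": 12, "Cat": 42}


def coalition_cost(members):
    """Assumed rule: a shared ride costs the fare to the furthest stop."""
    return max((solo_fare[m] for m in members), default=0)


def shapley_values(players, cost):
    """Average each player's marginal cost over all joining orders."""
    totals = {p: Fraction(0) for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        riders = []
        for p in order:
            before = cost(riders)
            riders.append(p)
            totals[p] += cost(riders) - before
    return {p: total / len(orderings) for p, total in totals.items()}


shares = shapley_values(list(solo_fare), coalition_cost)
for name, share in shares.items():
    print(f"{name} pays {share}")
# Ann pays 2, Bob pays 5, Cat pays 35: together the full fare of 42.
```

Enumerating every ordering is only feasible for a handful of players, which is why practical tools rely on approximations or model-specific shortcuts.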

In our case, we could use the combination of Random Forest and SHAP to see the relative importance of different features of an environment as perceived by different participants. This would enable us to compare the actions of each participant with their perceptions. The SHAP analysis suggests a “Most important” feature in the decision so that we can begin to understand the outcome of the Random Forest analysis in a more interpretative way. We can do this for the whole range of cognitive capabilities, including dementia. By treating the perception process of people living with dementia in the same way as that of other people, we can begin to understand how the perception differences might be being processed and therefore begin to find a better way to discover how people with dementia feel about the environment around them.
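To show what a "most important" feature for a single prediction might look like, the sketch below computes exact Shapley attributions for one hypothetical participant. Everything in it is an assumption made for illustration: the `model` function is a hand-written stand-in for a trained model, the feature names and values are invented, and features are "switched on" relative to a single baseline environment. It does not use the SHAP library, which handles real trained models far more efficiently.

```python
from itertools import permutations


def model(x):
    """Hypothetical stand-in for a trained model's pleasantness prediction."""
    return 4.0 + 2.0 * x["light"] - 4.0 * x["noise"] + 1.0 * x["light"] * x["noise"]


baseline = {"light": 0.0, "noise": 0.0, "warmth": 0.0}   # reference environment
instance = {"light": 1.0, "noise": 1.0, "warmth": 1.0}   # environment experienced


def feature_attributions(model, instance, baseline):
    """Average the change in prediction as each feature is switched from
    its baseline value to its actual value, over all feature orderings."""
    names = list(instance)
    totals = dict.fromkeys(names, 0.0)
    orderings = list(permutations(names))
    for order in orderings:
        x = dict(baseline)
        previous = model(x)
        for name in order:
            x[name] = instance[name]
            current = model(x)
            totals[name] += current - previous
            previous = current
    return {name: total / len(orderings) for name, total in totals.items()}


attributions = feature_attributions(model, instance, baseline)
most_important = max(attributions, key=lambda name: abs(attributions[name]))

print(attributions)       # {'light': 2.5, 'noise': -3.5, 'warmth': 0.0}
print(most_important)     # noise
```

The attributions add up to the difference between the prediction for this environment and the prediction for the baseline, so each feature's share can be compared directly, participant by participant.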

Everything is ephemeral and we are just trying to see how the process might function to enable someone to create their perception at a moment in time. This is like the musician creating a performance or an engineer understanding a phenomenon in a complex system.

[1] The pianist Yuja Wang quoted one of her teachers saying this in “Arts in Motion 3”, BBC News, broadcast 14 December 2024.

[2] I think one of the best explanations of this is Parr T, Pezzulo G, Friston K (2022) Active Inference, MIT Press

[3] Person-Environment-Activity Research Laboratory at UCL.

[4] Tyler N (2025) Transport System Transitions Lecture Notes

[5] Zimmermann, M. (1989) The Nervous System in the Context of Information Theory. In: Schmidt, R.F. and Thews, G., Eds., Human Physiology, Springer, Berlin, pp 166-173. https://doi.org/10.1007/978-3-642-73831-9_7

[6] Dewey J (1922) Human Nature and Conduct, Henry Holt and Company

[7] Jastrow J (1899) The Mind’s Eye, Popular Science Monthly, February, 54

[8] Fisher, R. A. 1926. The arrangement of field experiments. Journal of the Ministry of Agriculture. 33, pp. 503-515. https://doi.org/10.23637/rothamsted.8v61q

[9] The first use of the word ‘normal’ in the context of statistics was made by Galton in 1885 (Oxford English Dictionary s.v. normal II.10, https://www.oed.com/dictionary/normal_adj?tab=meaning_and_use#34178648, accessed 18 August 2025). Prior to this, in the technical context, from the 17th century the word was used to mean a right angle.

[10] Fisher, R. A. 1924. On a distribution yielding the error functions of several well known statistics. Proceedings International Mathematical Congress, Toronto. 2, pp. 805-813.

[11] Tyler N (1992) The use of artificial intelligence in the design of high capacity bus systems, PhD Thesis, University of London

[12] Shapley values are named after their creator, Lloyd Shapley, who first introduced them in 1953. Abridged from: Molnar C (2025) Interpreting Machine Learning Models With SHAP, https://leanpub.com/shap. Shapley and Roth were awarded the Nobel Prize in Economics in 2012 for their work in “market design” and “matching theory”.
